Cloud Computing - Virtualization - Concepts

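1. The evolution of cloud computing

v1.0: compute-centric (KVM, Hyper-V, Xen, VMware ESXi); the goal is higher resource utilization

v2.0: resource-centric (OpenStack, VMware, AWS); infrastructure moves to the cloud, and resource services become standardized and automated

v3.0: application-centric (Docker, CoreOS, Cloud Foundry); applications move to the cloud, with agile development and application lifecycle management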

2. Types of cloud computing:

- IaaS: Infrastructure as a Service
- PaaS: Platform as a Service
- SaaS: Software as a Service
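One common way to picture the difference between the three service models is to ask which layers of the stack the provider manages. The sketch below encodes the usual textbook split. The layer names and the `responsibilities` helper are illustrative assumptions, and real providers draw the boundary in slightly different places.

```python
# Layers of a typical application stack, from the top (application) down to hardware.
LAYERS = [
    "applications", "data", "runtime", "middleware", "os",
    "virtualization", "servers", "storage", "networking",
]

# Index of the first layer (counting from the top) that the provider manages.
# This is the common textbook split, not a guarantee from any specific vendor.
PROVIDER_FROM = {"on-premises": len(LAYERS), "iaas": 5, "paas": 2, "saas": 0}


def responsibilities(model: str) -> dict[str, str]:
    """Map each layer to 'customer' or 'provider' for the given service model."""
    start = PROVIDER_FROM[model.lower()]
    return {layer: ("provider" if i >= start else "customer")
            for i, layer in enumerate(LAYERS)}


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        r = responsibilities(model)
        customer = [layer for layer, who in r.items() if who == "customer"]
        print(f"{model}: customer manages {customer or 'nothing'}")
```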

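3. Key technologies of cloud computing:

- Virtualization
- Distributed storage
- Data center networking
- Architecture: user interface, service catalog, management system, deployment tools, monitoring, server clusters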

4. Cloud computing deployments:

- Storage cloud
- Healthcare cloud
- Education cloud
- Communication cloud
- Financial cloud

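5. Virtualization

Cloud computing: a service

Virtualization: a technology for managing computer resources. Any technique that abstracts physical IT resources and converts them into another form counts as virtualization.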

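1) Types of virtualization

- Hosted virtualization: VirtualBox, VMware Workstation
- Bare-metal virtualization: VMware ESX, Xen, FusionSphere; the vendor must develop the kernel of the virtualization layer itself
- Hybrid virtualization: KVM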

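2) Virtualization layer architectures:

- Full virtualization: KVM
- Paravirtualization: Xen
- Hardware-assisted virtualization

Containers: decouple applications from the operating system.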

6. Compute virtualization

CPU virtualization:

- CPU QoS: shares, reservation, limit
- NUMA (Non-Uniform Memory Access)
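The three CPU QoS knobs interact roughly as follows. Every VM is first guaranteed its reservation, spare capacity is then divided in proportion to shares, and no VM may exceed its limit. The sketch below models that under simplifying assumptions: a single host, capacity in MHz, every VM always wants more CPU, and all shares are positive. `VmCpu` and `allocate_cpu` are hypothetical names, not any hypervisor's API.

```python
from dataclasses import dataclass


@dataclass
class VmCpu:
    name: str
    shares: int         # relative weight when competing for spare capacity (> 0)
    reservation: float  # guaranteed minimum, in MHz
    limit: float        # hard upper bound, in MHz


def allocate_cpu(capacity: float, vms: list[VmCpu]) -> dict[str, float]:
    """Split `capacity` MHz: reservations first, then spare capacity by shares, capped by limits."""
    reserved = sum(vm.reservation for vm in vms)
    if reserved > capacity:
        # Admission control should have rejected this configuration.
        raise ValueError("reservations exceed host capacity")
    alloc = {vm.name: vm.reservation for vm in vms}
    spare = capacity - reserved
    active = [vm for vm in vms if alloc[vm.name] < vm.limit]
    # Hand out spare capacity in proportion to shares. VMs that hit their limit
    # drop out, and whatever they could not take is redistributed next round.
    while spare > 1e-9 and active:
        total_shares = sum(vm.shares for vm in active)
        handed_out = 0.0
        for vm in active:
            portion = spare * vm.shares / total_shares
            grant = min(portion, vm.limit - alloc[vm.name])
            alloc[vm.name] += grant
            handed_out += grant
        spare -= handed_out
        active = [vm for vm in active if alloc[vm.name] < vm.limit - 1e-9]
    return alloc
```

For example, on a 3000 MHz host with VMs a (2000 shares, 500 MHz reservation), b (1000 shares, 400 MHz limit), and c (1000 shares), b is capped at 400 MHz. The 225 MHz it cannot use is redistributed to a and c in a 2:1 ratio, which gives 1900, 400, and 700 MHz.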

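Memory virtualization:

- Full virtualization (shadow page tables): a page table is maintained for each VM that records the mapping from the guest's virtual memory to host physical memory. The VMM loads it into the MMU for translation, so the guest OS does not need to be modified. However, in this approach each VM is allocated a fixed memory region.
- Paravirtualization (direct page-table writes): each VM creates its own page table and registers it with the virtualization layer, then keeps managing and maintaining that page table while it runs.
- Hardware-assisted virtualization: Intel EPT, AMD NPT
- Memory reuse (overcommitment): memory ballooning, memory sharing, memory swapping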
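A shadow page table is effectively the composition of two mappings. The first is the guest's own page table (guest virtual page to guest physical page). The second is the hypervisor's mapping (guest physical page to host physical page). The toy model below uses flat dictionaries instead of the multi-level tables that real MMUs walk, and its function names are made up for illustration. With EPT/NPT, the hardware walks both tables itself, so the hypervisor no longer has to build this composed table and keep it in sync.

```python
PAGE_SIZE = 4096  # assume 4 KiB pages


def build_shadow_table(guest_pt: dict[int, int], p2m: dict[int, int]) -> dict[int, int]:
    """Compose guest VPN -> guest physical page with the hypervisor's guest physical -> host page map.

    Guest pages the hypervisor has not backed yet are left out, so touching them faults.
    """
    return {vpn: p2m[gppn] for vpn, gppn in guest_pt.items() if gppn in p2m}


def translate(shadow: dict[int, int], vaddr: int) -> int:
    """What the MMU does with the shadow table: one lookup, with no guest involvement."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in shadow:
        raise LookupError(f"page fault at {vaddr:#x}: hypervisor must fill the shadow entry")
    return shadow[vpn] * PAGE_SIZE + offset
```

For example, suppose the guest maps virtual page 1 to guest physical page 7, and the hypervisor backs page 7 with host page 42. Then `translate(build_shadow_table({0: 5, 1: 7}, {5: 100, 7: 42}), 0x1010)` returns `42 * 4096 + 0x10`. Whenever the guest edits its own page table, the hypervisor has to update the shadow copy to match.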

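I/O virtualization:

- Full virtualization: the hypervisor emulates devices, so performance is relatively low
- Paravirtualization: the hypervisor provides an interface, and the guest kernel must be modified
- Hardware-assisted virtualization: I/O passthrough, SR-IOV (Single Root I/O Virtualization)
- I/O ring: improves I/O performance for I/O-intensive workloads that use large blocks and multiple queues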
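An I/O ring gets its benefit from a circular buffer shared between the guest driver and the backend. Many requests can be posted and consumed in batches, instead of trapping to the hypervisor once per request. The sketch below is a generic single-producer/single-consumer ring under that assumption. It does not reproduce the actual layout of virtio or any other product's ring, and `IoRing` is a hypothetical name.

```python
class IoRing:
    """Single-producer/single-consumer ring: the guest posts requests, the backend consumes them.

    Indices only ever increase; the slot is index & (size - 1), so size must be a power of two.
    """

    def __init__(self, size: int):
        if size <= 0 or size & (size - 1):
            raise ValueError("size must be a power of two")
        self.size = size
        self.slots = [None] * size
        self.prod = 0  # next index the producer (guest driver) writes
        self.cons = 0  # next index the consumer (backend) reads

    def push(self, req) -> bool:
        """Post one request; returns False when the ring is full."""
        if self.prod - self.cons == self.size:
            return False  # full: the caller must wait for the backend to catch up
        self.slots[self.prod & (self.size - 1)] = req
        self.prod += 1
        return True

    def pop_batch(self, max_items: int) -> list:
        """Consume up to max_items requests at once (one notification can cover the whole batch)."""
        n = min(max_items, self.prod - self.cons)
        batch = [self.slots[(self.cons + i) & (self.size - 1)] for i in range(n)]
        self.cons += n
        return batch
```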

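Policies:

- VM HA (high availability)
- DRS (Dynamic Resource Scheduling)
- DPM (Distributed Power Management): when load is low, VMs are consolidated onto fewer hosts and idle hosts are powered off to save energy
- IMC (Incompatible Migration Cluster): allows VMs to migrate between hosts with different CPU types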
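DPM's consolidation step is essentially a bin-packing problem: fit the current VM load onto as few hosts as possible so that the rest can be powered off. The sketch below uses first-fit decreasing, a standard bin-packing heuristic swapped in here for illustration. The load units, the 80% headroom default, and the `consolidate` function are assumptions, not how any particular DPM implementation decides.

```python
def consolidate(vm_loads: dict[str, float], host_capacity: float,
                headroom: float = 0.8) -> list[list[str]]:
    """Pack VMs onto as few hosts as possible using first-fit decreasing.

    Each host is filled only up to headroom * host_capacity, so that a load spike
    does not immediately overload it. Returns one list of VM names per powered-on host.
    """
    budget = host_capacity * headroom
    hosts: list[list[str]] = []
    used: list[float] = []
    # Place the largest VMs first; each goes on the first host with enough room.
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > budget:
            raise ValueError(f"{vm} does not fit on any host under the headroom limit")
        for i, u in enumerate(used):
            if u + load <= budget:
                hosts[i].append(vm)
                used[i] += load
                break
        else:
            hosts.append([vm])  # no existing host has room: keep one more host on
            used.append(load)
    return hosts
```

Take loads a=30, b=20, c=25, d=10, and e=5 on hosts of capacity 100 (80 usable). The plan is `[['a', 'c', 'b', 'e'], ['d']]`: two hosts stay on, and any other hosts in the cluster become candidates for power-off.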

7. Storage virtualization

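Storage virtualization uses certain techniques to gather multiple storage media into a storage pool that is managed centrally. A storage system that manages many different storage devices in a unified way, giving users large capacity and high data-transfer performance, is called virtual storage.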
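At its core, pooling means translating an address in the pool's single logical address space into a location on one of the underlying devices. The sketch below shows the simplest such mapping, plain concatenation of devices. Real storage virtualization layers typically add striping, redundancy, and thin allocation on top. `Device`, `StoragePool`, and the device names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    blocks: int  # usable capacity, in blocks


class StoragePool:
    """Present several devices as one linear block address space (simple concatenation)."""

    def __init__(self, devices: list[Device]):
        self.devices = devices
        self.capacity = sum(d.blocks for d in devices)

    def locate(self, lba: int) -> tuple[str, int]:
        """Translate a pool-level logical block address into (device name, device-local block)."""
        if not 0 <= lba < self.capacity:
            raise IndexError(f"LBA {lba} is outside the pool ({self.capacity} blocks)")
        for dev in self.devices:
            if lba < dev.blocks:
                return dev.name, lba
            lba -= dev.blocks  # skip past this device's range
        raise AssertionError("unreachable: the capacity check covers every LBA")
```

For a pool built as `StoragePool([Device("ssd0", 100), Device("san-lun1", 500), Device("nas-share", 50)])`, `locate(150)` returns `('san-lun1', 50)`. The consumer of the pool never needs to know which physical device holds a block.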

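Benefits:

- Improves hardware utilization and allows heterogeneous storage to be managed together
- Simplifies system management
- Improves the reliability of cloud storage platforms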

Storage resources:

- DAS (Direct-Attached Storage)
- NAS (Network-Attached Storage)
- SAN (Storage Area Network)

Storage devices:

- Local disks
- LUNs
- Storage pools
- NAS shared directories

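Datastores:

- A datastore is a logical storage unit that the virtualization platform can manage. It hosts VM workloads, and VM disks are created on it.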

Storage modes:

- Non-virtualized storage
- Virtualized storage
- Raw device mapping

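Ways to implement storage virtualization:

- Host-based storage virtualization: a single host accesses multiple storage systems (DAS, SAN)
- Storage-device-based virtualization: multiple hosts access the same disk array (SAN)
- Network-based storage virtualization: many-to-many access that integrates heterogeneous storage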

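Storage virtualization features:

- Thin provisioning and space reclamation
- Snapshots:
  - ROW (redirect-on-write): the original disk is frozen at snapshot time and mounted together with a differencing volume. New writes go to the differencing volume. Reads of blocks that have been rewritten come from the differencing volume, and reads of all other blocks come from the original disk.
  - COW (copy-on-write): before a block on the original disk is overwritten, its old contents are copied to the differencing volume, and then the new data is written to the original disk, which therefore always holds the current data. Reading the snapshot combines the differencing volume (for changed blocks) with the original disk.
  - WA (write anywhere): random writes
  - Snapshot chains
  - Linked clones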
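The difference between the two snapshot methods shows up in where a write goes and where each read is served from. The toy volumes below keep blocks in dictionaries to make that visible. They assume a single snapshot and ignore block allocation, metadata, and crash consistency. The class names are hypothetical.

```python
class CowVolume:
    """Copy-on-write: the base disk keeps the live data; old blocks are copied out first."""

    def __init__(self, base: dict[int, bytes]):
        self.base = dict(base)                   # live data, updated in place
        self.snap_delta: dict[int, bytes] = {}   # pre-snapshot contents of changed blocks

    def write(self, block: int, data: bytes) -> None:
        if block not in self.snap_delta:                          # first write since the snapshot:
            self.snap_delta[block] = self.base.get(block, b"")    # copy the old data out (extra I/O)
        self.base[block] = data                                   # then overwrite in place

    def read_live(self, block: int) -> bytes:
        return self.base.get(block, b"")

    def read_snapshot(self, block: int) -> bytes:
        # Changed blocks come from the delta; unchanged ones still live on the base disk.
        return self.snap_delta.get(block, self.base.get(block, b""))


class RowVolume:
    """Redirect-on-write: the base disk is frozen; new writes land in a delta."""

    def __init__(self, base: dict[int, bytes]):
        self.base = dict(base)              # frozen at snapshot time
        self.delta: dict[int, bytes] = {}   # blocks written after the snapshot

    def write(self, block: int, data: bytes) -> None:
        self.delta[block] = data            # no copy, just redirect

    def read_live(self, block: int) -> bytes:
        # Rewritten blocks come from the delta; everything else from the frozen base.
        return self.delta.get(block, self.base.get(block, b""))

    def read_snapshot(self, block: int) -> bytes:
        return self.base.get(block, b"")
```

COW pays an extra copy on the first write to each block but keeps the live data on the original disk. ROW avoids the copy, but the live view is spread across the base and the delta, which can slow reads as snapshot chains grow.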

VM disk file migration

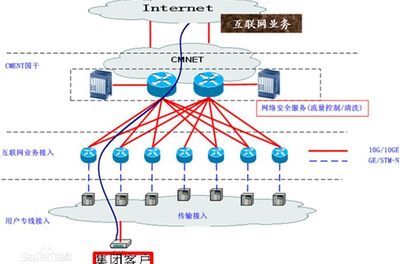

8. Network virtualization

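Goals:

- Save physical host NIC resources while providing the L2-L7 network services that applications' virtual networks need
- Network virtualization software provides logical switches and routers (L2-L3), logical load balancers, and logical firewalls (L4-L7). These can be combined in any way to give VMs a complete L2-L7 virtual network topology.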

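Characteristics:

- Decoupled from the physical network
- Abstracted network services
- On-demand network automation
- Secure isolation between tenant networks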

NIC virtualization:

- Software NIC virtualization
- Hardware NIC virtualization: SR-IOV

Virtual software switches:

- OVS (Open vSwitch)
- Handle communication between VMs
- Handle communication between VMs and the external network
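At its simplest, a virtual switch that forwards traffic between VM ports behaves like a MAC-learning Ethernet switch. The sketch below models only that behavior. Open vSwitch is actually flow-based and programmable, and its `NORMAL` action performs this kind of learning. The port names and MAC strings are hypothetical.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    """Minimal L2 learning switch, roughly what a virtual switch does for VM ports by default."""

    def __init__(self, ports: list[str]):
        self.ports = ports
        self.mac_table: dict[str, str] = {}  # MAC address -> port it was last seen on

    def handle(self, in_port: str, src_mac: str, dst_mac: str) -> list[str]:
        """Return the ports a frame is forwarded to."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out = self.mac_table.get(dst_mac)
        if dst_mac == BROADCAST or out is None:
            # Broadcast or unknown destination: flood to every other port.
            return [p for p in self.ports if p != in_port]
        if out == in_port:
            return []  # destination sits behind the ingress port: drop
        return [out]
```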

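Network virtualization:

- Link virtualization: virtual link aggregation, Layer 2 virtualization
  - vPC (Virtual Port Channel): virtual link aggregation
  - Tunneling protocols: GRE (Generic Routing Encapsulation), IPsec (Internet Protocol Security)
- Virtual networks: networks built from virtual links
  - Overlay networks (virtual Layer 2 extension networks)
    - An overlay network is another network built on top of an existing one
    - It allows routing information to reach destination hosts that are not identified by IP addresses, extending the LAN virtually; it is a "large Layer 2" virtual network technology
    - VXLAN
  - Virtual private networks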
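GRE, one of the tunneling protocols listed above, prepends a small header that names the payload's protocol, then carries the result inside an outer IP packet (IP protocol number 47). The sketch builds and parses only the minimal 4-byte header from RFC 2784, with no checksum, key, or sequence fields. The function names are illustrative.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_TEB = 0x6558  # Transparent Ethernet Bridging: the payload is a whole Ethernet frame


def gre_encapsulate(payload: bytes, protocol_type: int) -> bytes:
    """Prepend a minimal GRE header: C bit 0, reserved bits 0, version 0, then the protocol type."""
    flags_and_version = 0x0000
    return struct.pack("!HH", flags_and_version, protocol_type) + payload


def gre_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Return (protocol type, inner payload) for a minimal GRE header."""
    flags_and_version, protocol_type = struct.unpack("!HH", packet[:4])
    if flags_and_version & 0xF000:
        # Checksum/routing/key/sequence bits add optional fields this sketch does not parse.
        raise NotImplementedError("optional GRE fields are not handled in this sketch")
    if flags_and_version & 0x0007:
        raise ValueError("unsupported GRE version")
    return protocol_type, packet[4:]
```

Every packet carries the 4-byte GRE header plus a new 20-byte outer IPv4 header (without options). This overhead is why the inner MTU usually has to be reduced to avoid the fragmentation problem discussed in the GRE section.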

What is the relationship between VXLAN and SDN?

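I. Introduction to VLAN

VLAN stands for Virtual Local Area Network. A VLAN is a data-switching technology that divides the devices of a LAN logically (note: not physically) into separate segments, or smaller LANs, to form virtual workgroups. VLANs are implemented mainly in switches and routers, most commonly in switches. Not every switch supports VLANs: managed switches generally do, while simple unmanaged switches usually do not, and the switch's documentation will say which. VLANs bring three main benefits:

(1) Port separation. Even on the same switch, ports in different VLANs cannot communicate with each other, so one physical switch can be used as several logical switches.
(2) Network security. Different VLANs cannot communicate directly, which contains broadcast traffic and the security risks that come with it.
(3) Flexible management. Moving a user to a different network requires only a software configuration change, not a change of ports or cabling.

II. Introduction to VXLAN

What is VXLAN? VXLAN (Virtual eXtensible LAN) is an overlay, or tunneling, technology. VXLAN encapsulates the packets sent by a VM inside UDP, uses the IP/MAC addresses of the physical network as the outer header, and transmits the result over the physical IP network. At the destination, the tunnel endpoint decapsulates the packets and delivers the data to the target VM.

Why is VXLAN needed?

1. The VLAN count limit: the 12-bit VLAN ID allows only 4096 VLANs, far too few for large cloud data centers.
2. Limits of the physical network infrastructure: partitioning by IP subnet restricts where workloads that need Layer 2 connectivity can be deployed.
3. MAC table exhaustion on ToR switches: virtualization and east-west traffic produce large numbers of MAC table entries.
4. Multi-tenant scenarios: tenants' IP address ranges may overlap.

What is tunneling? Tunneling is a way of transferring data between networks over the infrastructure of an internetwork. The data (payload) carried through a tunnel can be frames or packets of a different protocol. The tunneling protocol re-encapsulates those frames or packets and sends them through the tunnel, and the new header supplies the routing information needed to deliver the encapsulated payload across the internetwork. A tunnel here resembles a point-to-point connection, which lets traffic from many sources travel over the same infrastructure through different tunnels. Tunneling replaces switched connections with point-to-point protocols and connects data addresses through a routed network. It allows authorized users, including mobile users, to reach the corporate network at any time and from anywhere. Tunnels make it possible to:

- Force traffic to a specific destination
- Hide private network addresses
- Carry non-IP packets over an IP network
- Provide data security

Benefits of tunneling: tunneling protocols have many advantages. In dial-up networks, for example, users usually receive dynamic IP addresses from their ISP, while corporate networks are protected by firewalls, NAT, and other security measures. As a result, employees who dial in through an ISP cannot pass through the firewall to reach internal resources. With a tunneling protocol, a dial-up user can obtain an internal corporate IP address, and because the PPP frames are encapsulated, the user's packets can traverse the firewall into the corporate network.

Applications of tunneling: virtual private networks are implemented with tunneling, which encapsulates corporate network data inside a tunnel for transmission. Tunneling protocols fall into Layer 2 protocols (PPTP, L2F, L2TP) and Layer 3 protocols (GRE, IPsec). The essential difference between them is the type of packet in which the user's data is encapsulated for transport through the tunnel.

III. Introduction to GRE

GRE characteristics:

1. Enables IP communication across different networks
2. Wraps L3 inside L3
3. Encapsulates traffic in IP packets
4. Uses point-to-point tunnels

Benefits of GRE:

- Rebuilds L2 and L3 communication without changing the underlying network architecture
- Lets guests on different hosts communicate with each other
- Makes guest migration easier
- Supports a larger number of networks

GRE tunnels have two main drawbacks. First, the added GRE header means that fragmentation, which switch hardware should handle, has to be done in software instead (STT, Stateless Transport Tunneling, can make up for this). Second, GRE carries broadcasts, and because the tunnels join the virtual Layer 2 networks together, broadcast storms become worse. For VMs, however, the virtual switch knows the mapping between each VM's IP and MAC addresses, so there is no need to find MAC addresses through ARP broadcasts; Neutron has a similar blueprint that can suppress broadcasts. For these reasons I am personally optimistic about STT. At the time of writing, however, Open vSwitch and the Linux kernel had not implemented STT, so Neutron had no STT plugin (only VXLAN and GRE plugins).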
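Both the VLAN limit and the VXLAN encapsulation described above show up in the header formats. An 802.1Q VLAN ID is 12 bits wide, while the VXLAN Network Identifier (VNI) in the 8-byte VXLAN header is 24 bits. The sketch builds that header as laid out in RFC 7348. In a real packet, the header sits between the outer UDP header (destination port 4789) and the inner Ethernet frame. The function names are illustrative.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN
VLAN_ID_BITS = 12      # width of the 802.1Q VLAN ID field
VNI_BITS = 24          # width of the VXLAN Network Identifier


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & 0x08:
        raise ValueError("I flag not set")
    return word1 >> 8


print(2 ** VLAN_ID_BITS, "VLAN IDs vs", 2 ** VNI_BITS, "VXLAN segments")
```

The final line prints `4096 VLAN IDs vs 16777216 VXLAN segments`, which is the scale difference behind the first reason listed for VXLAN.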

Docker networking: overlay mode

The following is taken from the official Docker documentation:

The overlay network driver creates a distributed network among multiple Docker daemon hosts. This network sits on top of (overlays) the host-specific networks, allowing containers connected to it (including swarm service containers) to communicate securely when encryption is enabled. Docker transparently handles routing of each packet to and from the correct Docker daemon host and the correct destination container.

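When you initialize a swarm or join a Docker host to an existing swarm, two new networks are created on that Docker host:

- an overlay network called `ingress`, which handles the control and data traffic related to swarm services
- a bridge network called `docker_gwbridge`, which connects the individual Docker daemon to the other daemons participating in the swarm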

You can create user-defined overlay networks using `docker network create`, in the same way that you can create user-defined bridge networks. Services or containers can be connected to more than one network at a time. Services or containers can only communicate across networks they are each connected to.

Although you can connect both swarm services and standalone containers to an overlay network, the default behaviors and configuration concerns are different. For that reason, the rest of this topic is divided into operations that apply to all overlay networks, those that apply to swarm service networks, and those that apply to overlay networks used by standalone containers.

To create an overlay network for use with swarm services, run `docker network create` with the overlay driver (`-d overlay`) and a network name.

To create an overlay network which can be used by swarm services or standalone containers to communicate with other standalone containers running on other Docker daemons, also add the `--attachable` flag.

You can specify the IP address range, subnet, gateway, and other options. See `docker network create --help` for details.

All swarm service management traffic is encrypted by default, using the AES algorithm in GCM mode. Manager nodes in the swarm rotate the key used to encrypt gossip data every 12 hours.

To encrypt application data as well, add `--opt encrypted` when creating the overlay network. This enables IPsec encryption at the level of the VXLAN. This encryption imposes a non-negligible performance penalty, so you should test this option before using it in production.

When you enable overlay encryption, Docker creates IPsec tunnels between all the nodes where tasks are scheduled for services attached to the overlay network. These tunnels also use the AES algorithm in GCM mode, and manager nodes automatically rotate the keys every 12 hours.

You can use the overlay network feature with both `--opt encrypted` and `--attachable`, and attach unmanaged containers to that network.

Most users never need to configure the ingress network, but Docker allows you to do so. This can be useful if the automatically chosen subnet conflicts with one that already exists on your network, or if you need to customize other low-level network settings such as the MTU.

Customizing the ingress network involves removing and recreating it. This is usually done before you create any services in the swarm. If you have existing services which publish ports, those services need to be removed before you can remove the ingress network.

During the time that no ingress network exists, existing services which do not publish ports continue to function but are not load-balanced. This affects services which publish ports, such as a WordPress service which publishes port

The `docker_gwbridge` is a virtual bridge that connects the overlay networks (including the ingress network) to an individual Docker daemon's physical network. Docker creates it automatically when you initialize a swarm or join a Docker host to a swarm, but it is not a Docker device. It exists in the kernel of the Docker host. If you need to customize its settings, you must do so before joining the Docker host to the swarm, or after temporarily removing the host from the swarm.

Swarm services connected to the same overlay network effectively expose all ports to each other. For a port to be accessible outside of the service, that port must be published using the `-p` or `--publish` flag on `docker service create` or `docker service update`. Both the legacy colon-separated syntax and the newer comma-separated value syntax are supported. The longer syntax is preferred because it is somewhat self-documenting.

| Flag value | Description |
| --- | --- |
| `-p 8080:80` or `-p published=8080,target=80` | Map TCP port 80 on the service to port 8080 on the routing mesh. |
| `-p 8080:80/udp` or `-p published=8080,target=80,protocol=udp` | Map UDP port 80 on the service to port 8080 on the routing mesh. |
| `-p 8080:80/tcp -p 8080:80/udp` or `-p published=8080,target=80,protocol=tcp -p published=8080,target=80,protocol=udp` | Map TCP port 80 on the service to TCP port 8080 on the routing mesh, and map UDP port 80 on the service to UDP port 8080 on the routing mesh. |
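The short and long `-p` syntaxes in the table carry the same three pieces of information. The parser below normalizes only the forms shown in the table and assumes TCP when no protocol is given. It is a hypothetical helper for illustration, not Docker's own parser, and it does not handle other options Docker accepts.

```python
def parse_publish(spec: str) -> dict:
    """Normalize one -p value (short or long syntax) into published/target/protocol."""
    if "=" in spec:
        # Long syntax: comma-separated key=value pairs.
        fields = dict(item.split("=", 1) for item in spec.split(","))
        published, target = int(fields["published"]), int(fields["target"])
        protocol = fields.get("protocol", "tcp")
    else:
        # Short syntax: published:target[/protocol]
        ports, _, protocol = spec.partition("/")
        published_s, target_s = ports.split(":")
        published, target = int(published_s), int(target_s)
        protocol = protocol or "tcp"
    return {"published": published, "target": target, "protocol": protocol}
```

For example, `parse_publish("8080:80/udp")` and `parse_publish("published=8080,target=80,protocol=udp")` produce the same dictionary, which is why either form in the table has the same effect.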

By default, swarm services which publish ports do so using the routing mesh. When you connect to a published port on any swarm node (whether it is running a given service or not), you are redirected to a worker which is running that service, transparently. Effectively, Docker acts as a load balancer for your swarm services. Services using the routing mesh are running in virtual IP (VIP) mode. Even a service running on each node (by means of the `--mode global` flag) uses the routing mesh. When using the routing mesh, there is no guarantee about which Docker node services client requests.

To bypass the routing mesh, you can start a service using DNS Round Robin (DNSRR) mode, by setting the `--endpoint-mode` flag to `dnsrr`. You must run your own load balancer in front of the service. A DNS query for the service name on the Docker host returns a list of IP addresses for the nodes running the service. Configure your load balancer to consume this list and balance the traffic across the nodes.
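In DNSRR mode, the load balancer you run in front of the service has to resolve the service name and spread connections across the returned addresses. Here is a minimal round-robin version. It assumes the resolver is injectable so that it can be tested without a swarm, and `resolve_all` and `RoundRobin` are hypothetical names.

```python
import itertools
import socket
from typing import Callable


def resolve_all(name: str,
                resolver: Callable[[str], tuple] = socket.gethostbyname_ex) -> list[str]:
    """Return every IPv4 address the resolver gives for `name`, deduplicated and sorted."""
    _hostname, _aliases, addresses = resolver(name)
    return sorted(set(addresses))


class RoundRobin:
    """Hand out backend addresses in turn, one per incoming connection."""

    def __init__(self, addresses: list[str]):
        if not addresses:
            raise ValueError("no backends")
        self._cycle = itertools.cycle(addresses)

    def next_backend(self) -> str:
        return next(self._cycle)
```

A real load balancer would also re-resolve the name periodically, since tasks come and go, and would health-check the backends before sending traffic to them.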

By default, control traffic relating to swarm management and traffic to and from your applications runs over the same network, though the swarm control traffic is encrypted. You can configure Docker to use separate network interfaces for handling the two different types of traffic. When you initialize or join the swarm, specify `--advertise-addr` and `--data-path-addr` separately. You must do this for each node joining the swarm.

The ingress network is created without the `--attachable` flag, which means that only swarm services can use it, and not standalone containers. You can connect standalone containers to user-defined overlay networks which are created with the `--attachable` flag. This gives standalone containers running on different Docker daemons the ability to communicate without the need to set up routing on the individual Docker daemon hosts.

For most situations, you should connect to the service name, which is load-balanced and handled by all containers ("tasks") backing the service. To get a list of all tasks backing the service, do a DNS lookup for `tasks.<service-name>`.
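The two lookups mentioned here can be compared side by side. The service name normally resolves to the load-balanced virtual IP, while `tasks.<service-name>` returns one address per task. The helper below is a sketch that assumes it runs in a container attached to the same overlay network. The resolver parameter exists only so that the helper can be exercised with fake data, and `service_endpoints` is a hypothetical name.

```python
import socket
from typing import Callable

Resolver = Callable[[str], tuple]


def service_endpoints(service: str,
                      resolver: Resolver = socket.gethostbyname_ex) -> dict[str, list[str]]:
    """Look up a swarm service by its name and by tasks.<name>; unresolvable names give []."""
    def lookup(name: str) -> list[str]:
        try:
            return sorted(resolver(name)[2])
        except OSError:  # socket.gaierror / socket.herror, e.g. outside the overlay network
            return []

    return {"service": lookup(service), "tasks": lookup(f"tasks.{service}")}
```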

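What does interconnecting SD-WAN devices mean?

Interconnecting SD-WAN devices refers to SD-WAN's availability over Internet connections.

The core of SD-WAN is software definition: different resources are defined in the network space and are created, managed, and allocated programmatically.

SD-WAN can be used as a virtual network. The concept of NFV (Network Functions Virtualization) offers an opportunity to move from dedicated WAN edge customer premises equipment toward automation and orchestration. SD-WAN also provides visual network management pages and makes cloud access easier.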
